Data and information are the new oil; who hasn’t heard this metaphor? Thus, protecting company data is – obviously – a management priority. The main challenge for CIOs, CISOs, data protection officers, and their security architects is to select and combine the right software solutions, patterns, and methodologies into a working, cost-effective architecture.

Access control, encryption, DLP/CASBs, masking, anonymization, or pseudonymization – all can help secure sensitive data in the cloud. But how do these puzzle pieces fit together? And how do public clouds, such as AWS, support engineers and security professionals implementing such an architecture? Let’s dig in.

Access Control

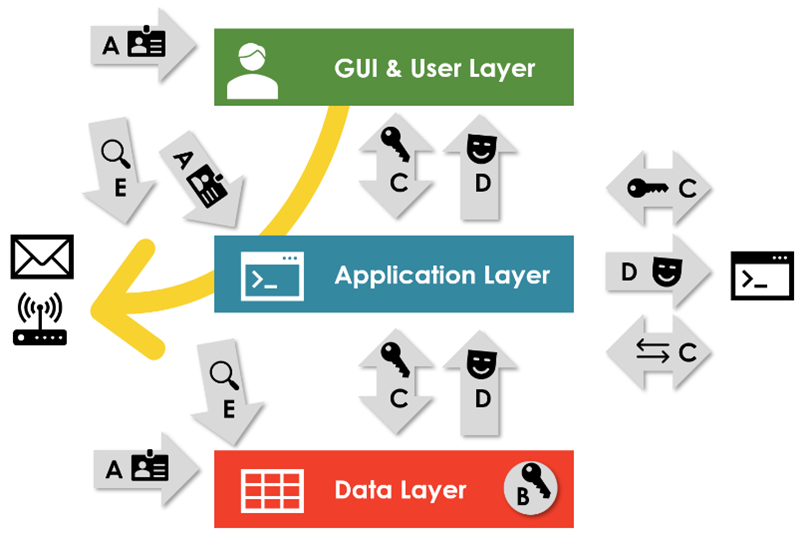

The first data protection puzzle piece is so apparent that engineers might even forget to mention it: access control (Figure 1, A). Following the need-to-know principle, only employees or technical accounts with an actual need should be able to access data. So, access to data should be possible only after someone has explicitly granted it. Access control has two facets: identity and access management (e.g., RBAC and LDAP) and network-layer connectivity (think firewalls).

Figure 1 -- How IT departments protect company data
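The need-to-know principle boils down to a default-deny rule: nothing is accessible until someone grants it explicitly. The following Python snippet is a minimal sketch of that idea only, not of any real IAM product; the class `AccessRegistry` and the principal and dataset names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AccessRegistry:
    """Default-deny registry: access exists only after an explicit grant."""

    grants: set[tuple[str, str]] = field(default_factory=set)

    def grant(self, principal: str, dataset: str) -> None:
        # Record an explicit decision to give this principal access.
        self.grants.add((principal, dataset))

    def revoke(self, principal: str, dataset: str) -> None:
        # Remove the grant; a missing grant is silently ignored.
        self.grants.discard((principal, dataset))

    def is_allowed(self, principal: str, dataset: str) -> bool:
        # No matching grant means no access (need-to-know).
        return (principal, dataset) in self.grants


# Hypothetical principals and dataset, for illustration only.
registry = AccessRegistry()
registry.grant("payroll-service", "salaries")

payroll_ok = registry.is_allowed("payroll-service", "salaries")
intern_ok = registry.is_allowed("intern", "salaries")
```

In this sketch, `payroll_ok` is true because of the explicit grant, while `intern_ok` is false simply because nobody granted anything.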

Encryption

The second puzzle piece for data protection in the cloud is encryption. Encryption prevents the misuse of sensitive data by persons who, for technical reasons, can access data but have no need to understand and work with it. In other words: Data-at-rest encryption (Figure 1, B) addresses the risk of data leakage due to the loss of physical disks, as well as admins or hackers exfiltrating sensitive files. They might steal data, but the data is useless for them due to the encryption.

Data-in-transit encryption (Figure 1, C) secures data transfers between services and VMs within the company or with external partners. The aim: to prevent eavesdropping or manipulation of information when two applications or components exchange information.

The big cloud providers such as Microsoft’s Azure, Google’s GCP, and Amazon’s AWS have woven encryption and access control concepts into their services. They encrypt (nearly) all data they store, be it the data in GCP Bigtable, Azure’s CosmosDB, or AWS S3 object storage. It is hard to find a cloud service without default encryption to help secure sensitive data.

Taking an S3 bucket as an example, AWS provides various configuration options for access control and encryption. First, engineers can configure connectivity, especially whether buckets and their data are accessible from the internet (Figure 2, 1). They can implement fine-grained user and role-based access policies for individual S3 buckets (2). Next, they can enforce HTTPS encryption for access (3) and define sophisticated encryption-at-rest options if the default encryption is insufficient (4).

Figure 2 -- AWS S3 Bucket basic access and encryption settings for securing sensitive data
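As a rough sketch of what such settings can look like outside the console, the Python snippet below builds three configuration documents: a public access block (1), a bucket policy that denies any request not sent over HTTPS (3), and a default encryption rule using KMS (4). The bucket name `example-sensitive-data` and the helper `https_only_policy` are assumptions for illustration. The snippet only assembles the JSON; applying it to a real bucket via the AWS CLI or an SDK is not shown.

```python
import json


def https_only_policy(bucket_name: str) -> dict:
    """Bucket policy that denies every S3 action over plain HTTP."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover the bucket itself and all objects in it.
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                # aws:SecureTransport is "false" for non-TLS requests.
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


# (1) Block all forms of public access to the bucket.
public_access_block = {
    "BlockPublicAcls": True,
    "IgnorePublicAcls": True,
    "BlockPublicPolicy": True,
    "RestrictPublicBuckets": True,
}

# (4) Default encryption at rest with a KMS-managed key.
encryption_config = {
    "Rules": [
        {"ApplyServerSideEncryptionByDefault": {"SSEAlgorithm": "aws:kms"}}
    ]
}

# Hypothetical bucket name, used only for this example.
policy = https_only_policy("example-sensitive-data")
policy_json = json.dumps(policy, indent=2)
```

Keeping such documents in code or templates, rather than clicking through a console, is one way to enforce the same baseline across many buckets.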

When it comes to data protection in the cloud, security specialists and CISOs love access control and encryption. IT departments can and should enforce technical security baselines for them, ensuring a broad adoption of these patterns. The subsequent patterns of data masking, anonymization, and pseudonymization (Figure 1, D) differ.

Enforcing them within an organization is more of a challenge because they relate more closely to application engineering. Cloud providers have helpful services, but their adequacy depends on an organization’s technology stack – and the company’s overall application engineering tooling and methodology.

Masking, Anonymization, and Pseudonymization

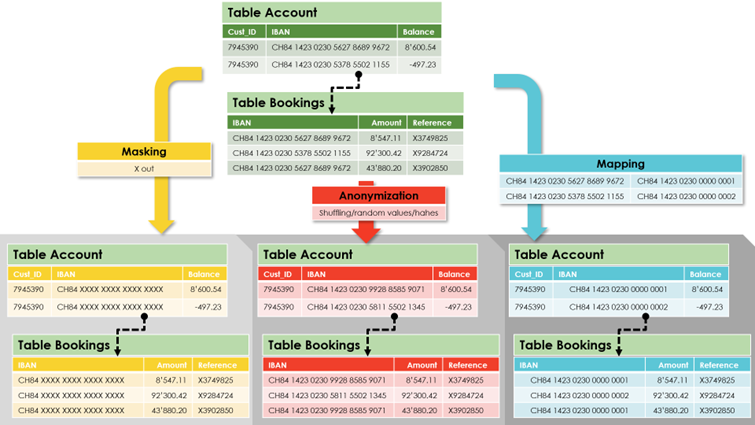

Masking, anonymization, and pseudonymization all hide sensitive data in databases, including personally identifiable information (PII) like social security numbers, IBAN account numbers, or private health data. The hiding, however, works differently:

- Masking zeroes or “exes out” sensitive data. For example, every IBAN becomes “XXXXXXXXXX.”

- Anonymization replaces sensitive data with pseudo-data. Anonymization is, by definition, irreversible but preserves relationships such as primary-foreign-key relations between database tables. Typical implementation variants are shuffling the data (e.g., assigning IBANs to different, “wrong” customers), substituting data with generated new data items, or hashing. Two points are critical. First, the replacements must be consistent, no matter where the data is. If the “old” IBAN is CH249028899406, occurrences in all tables and files become the new “anonymized” IBAN CH32903375. Second, no mapping from old to new values may persist. If such a mapping table is necessary during the anonymization, it must be deleted afterward to ensure irreversibility.

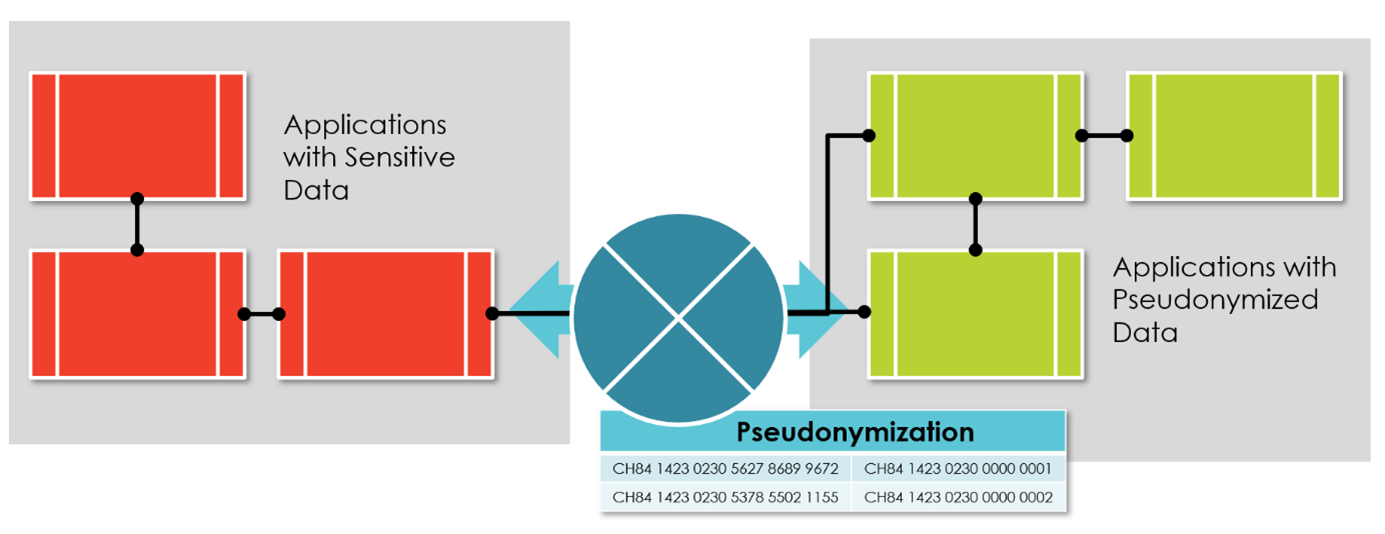

- Pseudonymization replaces sensitive data in specific systems and databases with tokens. Systems, engineers, and users seeing the tokens cannot reconstruct the original data; only a dedicated component can pseudonymize and de-pseudonymize data.

Figure 3 illustrates these concepts. On the left, IBANs are exed out; in the middle, IBANs are replaced with random values; to the right, artificial identifiers replace the original IBANs. A mapping table, to which engineers and users have no access, matches original and artificial identifiers.

Figure 3 -- Masking, anonymization, and pseudonymization all help secure sensitive data in the cloud
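To make the difference between masking and anonymization concrete, here is a minimal Python sketch. It assumes one possible anonymization variant, a keyed hash whose random key is never stored, so replacements are consistent across tables but no old-to-new mapping survives. The function names, table layouts, and the generated digits are illustrative assumptions; the generated values are not valid IBANs with correct check digits.

```python
import hashlib
import hmac
import secrets

MASK = "X" * 10  # every masked value looks the same


def mask(value: str) -> str:
    """Masking: replace the value with a fixed string of Xs."""
    return MASK


def make_anonymizer():
    """Return a function that consistently replaces IBANs.

    The random key lives only inside this closure. Once the returned
    function is discarded, the replacement cannot be recomputed.
    """
    key = secrets.token_bytes(32)

    def anonymize(iban: str) -> str:
        digest = hmac.new(key, iban.encode("utf-8"), hashlib.sha256).hexdigest()
        # Derive 12 digits from the digest and keep the country prefix.
        return iban[:2] + f"{int(digest, 16) % 10**12:012d}"

    return anonymize


# Two hypothetical tables that share the IBAN as a join attribute.
customers = [{"customer_id": 1, "iban": "CH249028899406"}]
payments = [
    {"iban": "CH249028899406", "amount": 100},
    {"iban": "CH249028899406", "amount": 250},
]

anonymize = make_anonymizer()
anon_customers = [{**row, "iban": anonymize(row["iban"])} for row in customers]
anon_payments = [{**row, "iban": anonymize(row["iban"])} for row in payments]

# The join between the anonymized tables still works.
joined = [
    p for p in anon_payments if p["iban"] == anon_customers[0]["iban"]
]
masked_iban = mask("CH249028899406")
```

After masking, all IBANs are indistinguishable, so joins on that attribute are lost. After anonymization, both payments still join to the same customer, which is why anonymization suits test data better.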

While technologically similar, these patterns for data protection in the cloud serve different use cases. Masking is an enabler for outsourcing or offshoring. It hides sensitive data without having to change the application by “just” masking some attributes in a database view. Also, it helps when copying production databases to test and development systems for engineering and quality assurance, at least for simple cases.

However, the standard pattern for test data is anonymization. Anonymization keeps inter-table dependencies, such as primary-foreign-key relationships, which are crucial for data-intensive systems such as accounting software. Finally, pseudonymization makes sense when data is so sensitive that it must be hidden across the complete application landscape. Dedicated components exchange original data and pseudonyms at defined entry and exit points, so the systems in between process only de-identified data (Figure 4).

Figure 4 -- The pseudonymization pattern
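The pattern can be sketched in a few lines of Python. The class `PseudonymVault` below is a hypothetical stand-in for the dedicated component: it alone holds the mapping between original values and tokens, while everything between entry and exit point sees only tokens. A real implementation would keep the mapping in a hardened, access-controlled store rather than in memory.

```python
import secrets


class PseudonymVault:
    """Dedicated component that alone can map values to tokens and back."""

    def __init__(self) -> None:
        self._token_by_value: dict = {}
        self._value_by_token: dict = {}

    def pseudonymize(self, value: str) -> str:
        # Reuse the existing token so the same value always maps
        # to the same pseudonym.
        token = self._token_by_value.get(value)
        if token is None:
            # Random token: it carries no information about the value.
            token = "tok_" + secrets.token_hex(8)
            self._token_by_value[value] = token
            self._value_by_token[token] = value
        return token

    def depseudonymize(self, token: str) -> str:
        # Raises KeyError for unknown tokens.
        return self._value_by_token[token]


vault = PseudonymVault()

# Entry point: the original IBAN is swapped for a token.
token = vault.pseudonymize("CH249028899406")

# Inner systems work with the token only.
internal_record = {"account": token, "amount": 100}

# Exit point: the vault restores the original value.
restored = vault.depseudonymize(internal_record["account"])
```

Unlike the anonymization case, the mapping is kept on purpose here, which is why access to the vault itself needs strict protection.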

All big cloud providers support masking, anonymization, and pseudonymization to help secure sensitive data. The exact terminology and names differ, however. The AWS Database Migration Service or Static Data Masking for Azure SQL Database can transform data during a copy process (e.g., from production to test databases). For outsourcing and legacy applications, when just a few attributes on a GUI must be masked on the fly, solutions such as GCP BigQuery Dynamic Data Masking are better suited.

These examples illustrate that public clouds provide masking and anonymization features for selected data processing or storage PaaS services, but the features differ from cloud to cloud and even between services of the same cloud. The clouds do not, however, provide holistic masking and anonymization concepts or templates customers can directly apply to their enterprise cloud landscapes.

Data Loss Prevention and Cloud Access Security Broker

The final pieces of the cloud data protection puzzle are data loss prevention (DLP), cloud access security brokers (CASB), and proxies (Figure 1, E). In contrast to access control, the focus of this group of tools and methodologies is not on who can access data. Instead, they help prevent data misuse and exfiltration by users with a legitimate need to see and work with the data.

Proxies are sledgehammer-style security: block URLs completely to prevent data transfers to cloud services such as Dropbox. CASBs are the scalpel-style variant. They incorporate context information when deciding whether to allow or block traffic (e.g., by considering the concrete user). Network DLP looks into traffic from laptops or servers to the cloud. In the workplace area, email DLP inspects outbound mail.
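The contrast between the two styles can be illustrated with a small Python sketch. It is a toy model under stated assumptions, not how any real proxy or CASB product is configured: the host list, the `Request` fields, and the rule that only a "marketing" group may upload are all hypothetical.

```python
from dataclasses import dataclass
from urllib.parse import urlparse

# Hypothetical list of file-sharing hosts under control.
WATCHED_HOSTS = {"dropbox.com", "www.dropbox.com"}


def proxy_allows(url: str) -> bool:
    """Sledgehammer: block watched hosts for everyone."""
    host = urlparse(url).hostname or ""
    return host not in WATCHED_HOSTS


@dataclass(frozen=True)
class Request:
    user_group: str
    url: str
    action: str  # "upload" or "download"


def casb_allows(request: Request) -> bool:
    """Scalpel: decide based on user and action, not only the URL."""
    host = urlparse(request.url).hostname or ""
    if host not in WATCHED_HOSTS:
        return True
    if request.action == "download":
        # Downloads do not move company data out.
        return True
    # Uploads to watched hosts only for an approved group.
    return request.user_group == "marketing"


url = "https://www.dropbox.com/upload"
proxy_decision = proxy_allows(url)
engineer_upload = casb_allows(Request("engineering", url, "upload"))
marketing_upload = casb_allows(Request("marketing", url, "upload"))
engineer_download = casb_allows(Request("engineering", url, "download"))
```

The proxy rejects the URL for everybody, whereas the context-aware check blocks only the engineer's upload and lets the other two requests pass.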

All tools controlling outbound traffic are popular in the workplace area to prevent business users or engineers working on laptops from exfiltrating data. In the cloud, they are an essential piece as well. They can stop engineers and admins working with cloud resources from exfiltrating data from cloud VMs or cloud storage and database PaaS services to the internet.

To conclude: Various decades-old pre-cloud-era methods, as well as new features and tools in the public cloud (Table 1, above), help companies secure sensitive data. Putting these cloud services and patterns together is the big puzzle named cloud security architecture. It is and continues to be a creative and complex art.
